Mastering Document Comparison: Key Insights So Far

Team IntelliNews is a collaboration between GMA Network (Philippines) and Helsingin Sanomat (Finland) as part of the 2024 JournalismAI Fellowship. Journalists and technologists from both organisations proposed to develop a document comparison tool, which apart from the English language, also focuses on the national and regional languages in Finland and the Philippines. Here, they discuss their progress mid-way through the Fellowship.

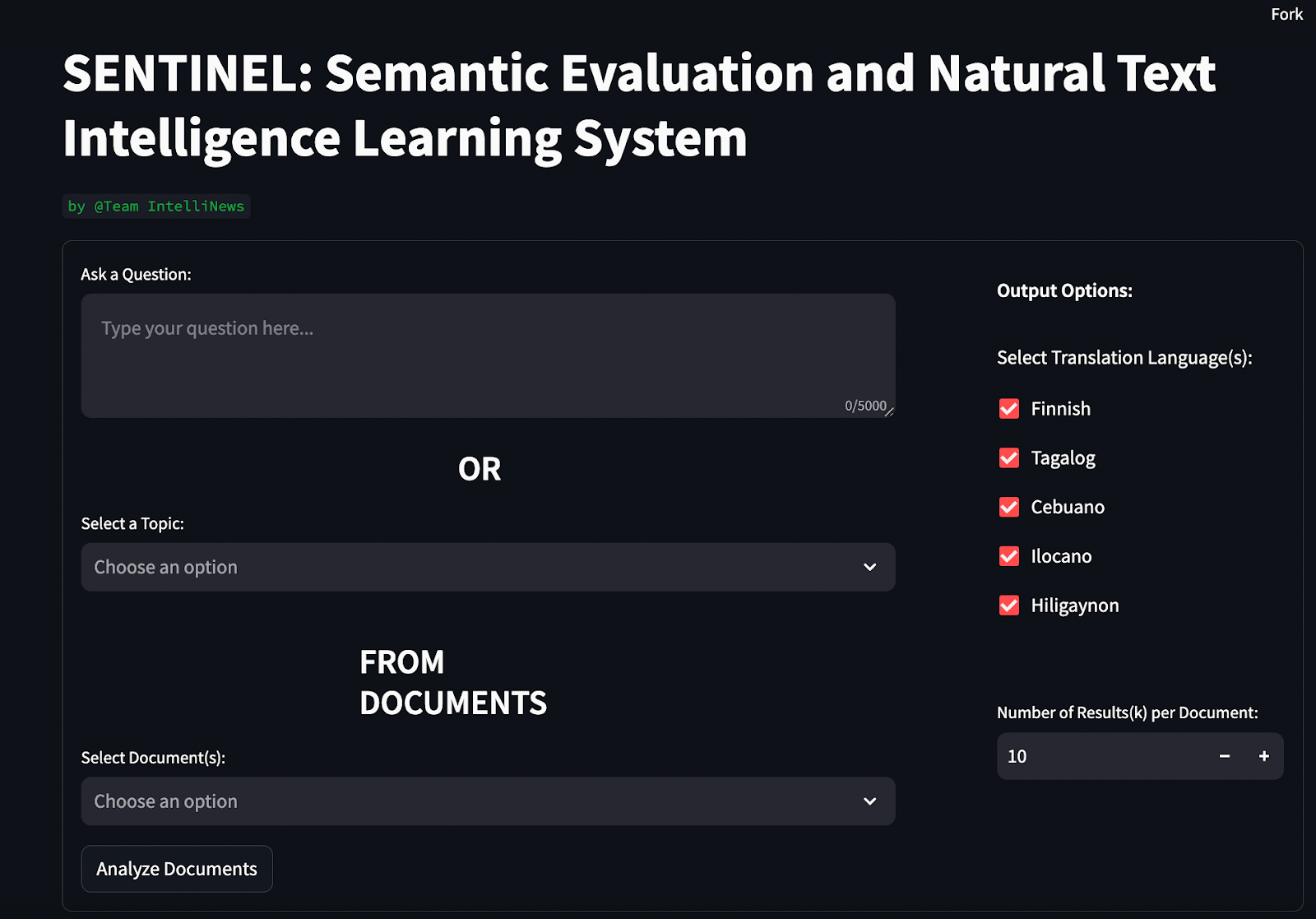

SENTINEL (Semantic Evaluation and Natural Text Intelligence Learning System) is a cutting-edge document comparison tool designed to help journalists quickly analyze and compare large sets of documents. It can be used e.g. for comparing political party election programs against government coalition agreements to see whose messages prevail, or analyzing stakeholder comments on legislative proposals to identify supporters and opponents along with their reasoning.

Low-res prototype of the SENTINEL

How the SENTINEL Works

The SENTINEL operates by leveraging a combination of vector indexing, similarity searches, and large language models (LLMs) to retrieve and compare document chunks. Here’s a brief rundown of the process:

Document Ingestion: Multiple documents are ingested into the system.

Vector Indexing: The documents are broken down into smaller chunks, which are then indexed as vectors.

Querying: A query is made to the system.

Similarity Search: The system performs a similarity search to retrieve the most relevant chunks from the documents based on the query.

Custom Models: Some custom similarities and differences models may be applied to refine the search.

Output Generation: The relevant chunks are retrieved and presented, allowing for a detailed comparison.

Working with an International Team

Collaborating with an international team has been a remarkable experience. It has allowed us to exchange diverse perspectives and methodologies, enriching the development process. Moreover, it has been inspiring to see the innovative approaches taken by other teams within the fellowship.

Key Learnings

The first half of the fellowship has provided invaluable insights. Here are some of the key learnings:

Defining the Problem Precisely: It is crucial to clearly define the problem our tool aims to solve. "Document comparison" sounds simple, but specifying exactly what we want to compare and how we convey that to the AI is essential.

Handling GenAI's Tendencies: Generative AI often has a propensity to please and view things positively. Training it to understand politically nuanced language, where something might be nominally supported but effectively delayed or compared unfavorably with other parties, is challenging.

Collaboration on GitHub: Being spread across continents necessitates strict guidelines for code development. We’ve planned to use GitHub repositories with pull requests, code reviews, and branches.

Importance of Testing: Creating precise testing sets is vital. This involves manually reading documents, devising test questions, identifying relevant excerpts, and forming optimal responses. This doesn’t end here; we must test by querying the tool and manually checking if it finds the predefined excerpts, documenting everything systematically in an Excel sheet.

User feedback is key: Allowing end users to test the low-res prototype provided us with much needed insights into how we could further improve the usability and design of our tool. For example, user feedback helped us identify which features on our prototype we could do without or we need to retain, allowing us to create a more functional document comparison tool.

Dealing with Multiple Languages: Working with languages other than English has proven to be complex sometimes, as expected. For example, off-the-shelf solutions for entity recognition often don’t work well in other languages. Fortunately, this might not be necessary for the final product. On the other hand, vectorization has performed surprisingly well, as the tool successfully finds relevant excerpts e.g. Finnish texts. Next, we will work on ensuring that the results are generated in the desired language.

Conclusion So Far

The journey so far has been very rewarding. Each challenge we’ve encountered has taught us something new and brought us closer to creating a powerful tool for comparing and analyzing documents. We look forward to the second half of the fellowship and are confident that the skills and knowledge we've gained will help us continue to improve.

To learn more about team IntelliNews’ work or potentially collaborate with the team, email us at lakshmi@journalismai.info. The 2024 JournalismAI Fellowship brought together 40 journalists and technologists to collaboratively work on using AI to improve journalism, its systems and processes. Read more about it here.